The Internet of Things might also be thought of as the internet of interoperable things. A systematic testing regime can help uncover problems when devices work in concert.

DELMAR HOWARD, INTERTEK GROUP PLC

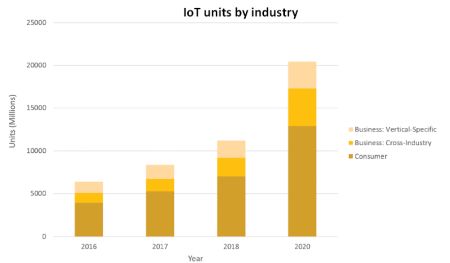

The Internet of Things (IoT) is growing rapidly. In three years, the number of IoT-enabled products is expected to more than double, from 8.4 billion to 20 billion. There are already more than 450 IoT platforms on the market.

Many IoT manufacturers have proprietary protocols, making it difficult to ensure IoT end-products work together in their intended environments. Additionally, IoT manufacturers must account for future updates and security patches, device upgrades, and user experience and expectations. Perhaps one of the biggest challenges for IoT-enabled products is an absence of standard guidelines to help ensure interoperability, leaving risk assessment, testing, and action to individual manufacturers.

Any IoT-enabled device may encounter numerous risks. They include vulnerabilities in the software running on other devices connected to the network; access control through the network and other devices; potential ecosystem disruptions; default or hard-coded credentials; no clear path to update legacy firmware; data sent as unencrypted text; open ports vulnerable to data breaches; interference from other products, signals, or electronics; and cybersecurity concerns of other devices and networks.

Testing devices for interoperability helps ensure that products work together securely, without sacrificing performance. In the world of cybersecurity analysis, information security management systems utilize the four-stage “Plan, Do, Check, Act” (PDCA) system. This methodology can also be employed to test interoperability.

Plan: This phase involves identifying improvement opportunities within a product and its systems. Evaluating the current process and pinpointing causes of failures will allow you to mitigate risk and address issues. Start the process by identifying the test type. Typical test types might include performance, security, compatibility or an ad-hoc approach. Each IoT device may require its own special considerations, so particularities of the product itself, as well as its intended environment and use, can factor into test plans.

It’s important to keep in mind that testing should include a mix of both automated and manual testing; it should also include negative testing as a complement to positive evaluations.
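As a minimal sketch of how positive and negative tests complement each other, consider a parser for a temperature report received from a peer device. The function `parse_reading`, the payload fields `device_id` and `temp_c`, and the accepted range are all assumptions made for illustration; they do not come from any particular product or protocol.

```python
import json


def parse_reading(payload: str) -> dict:
    """Parse a hypothetical temperature report sent by a peer device."""
    try:
        data = json.loads(payload)
    except json.JSONDecodeError as exc:
        raise ValueError("payload is not valid JSON") from exc
    if not isinstance(data, dict):
        raise ValueError("payload must be a JSON object")
    if "device_id" not in data or "temp_c" not in data:
        raise ValueError("missing required field")
    temp = data["temp_c"]
    if isinstance(temp, bool) or not isinstance(temp, (int, float)):
        raise ValueError("temp_c must be a number")
    if not -40.0 <= temp <= 125.0:  # assumed sensor range
        raise ValueError("temp_c out of range")
    return {"device_id": str(data["device_id"]), "temp_c": float(temp)}


def rejects(payload: str) -> bool:
    """Return True if the parser refuses the payload (the negative-test outcome)."""
    try:
        parse_reading(payload)
    except ValueError:
        return True
    return False


# Positive test: a well-formed report is accepted.
good = parse_reading('{"device_id": "t1", "temp_c": 21.5}')

# Negative tests: malformed, incomplete, and out-of-range reports are refused.
bad_payloads = ["not json", '{"device_id": "t1"}', '{"device_id": "t1", "temp_c": 900}']
all_rejected = all(rejects(p) for p in bad_payloads)
```

The positive case confirms the device understands what a well-behaved partner sends; the negative cases confirm it fails safely when another vendor's device sends something unexpected.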

Interoperability test conditions should not be limited to the individual app or DUT (device under test); they should factor in how the DUT interacts with all the applications in the system.

As plans develop, it can be helpful to create a data repository that can serve as a reference for future products and for updates or upgrades of the current device. Some developers create a Requirements Traceability Matrix (RTM) to keep track of test cases, test conditions, and the acceptance requirements for individual test cases.

The RTM helps ensure 100% test coverage. Here, 100% coverage simply means that the tests laid out in the plan cover every device function. The RTM helps in this endeavor by providing a snapshot that exposes coverage gaps. Testers usually start the RTM by writing their test scenarios and objectives, then the test cases. For each test scenario, there will be one or more test cases. As tests proceed, the RTM expands to include test-case execution status and defects.
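One way to picture an RTM is as a small table that maps each test case to the device functions it exercises, with room for execution status and defects. The sketch below assumes a simple in-memory structure; the class `RtmEntry`, the function names, and the test-case IDs are hypothetical, and real projects often keep the RTM in a spreadsheet or test-management tool instead.

```python
from dataclasses import dataclass, field


@dataclass
class RtmEntry:
    case_id: str
    scenario: str
    functions: list                      # device functions this test case exercises
    status: str = "not run"              # "not run", "pass", or "fail"
    defects: list = field(default_factory=list)


def coverage_gaps(device_functions, rtm):
    """Device functions that no test case in the RTM exercises."""
    covered = {name for entry in rtm for name in entry.functions}
    return sorted(set(device_functions) - covered)


def coverage_percent(device_functions, rtm):
    """Share of device functions exercised by at least one test case."""
    total = len(set(device_functions))
    return 100.0 * (total - len(coverage_gaps(device_functions, rtm))) / total


# Hypothetical device functions and test cases.
device_functions = ["pairing", "firmware_update", "telemetry_upload", "remote_unlock"]
rtm = [
    RtmEntry("TC-01", "Pair with hub", ["pairing"], status="pass"),
    RtmEntry("TC-02", "Upload telemetry", ["telemetry_upload"], status="fail", defects=["DEF-7"]),
    RtmEntry("TC-03", "Update after pairing", ["pairing", "firmware_update"]),
]

gaps = coverage_gaps(device_functions, rtm)        # functions still lacking a test
percent = coverage_percent(device_functions, rtm)  # coverage so far
```

In this example the snapshot shows that `remote_unlock` has no test case yet, so coverage falls short of 100% until one is added.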

Do: At this step in the process, it is time to conduct evaluations and assessments, collect analytics and data, and document any issues and failures. The Do step includes executing test cases, logging defects, getting them resolved, and then retesting and regression testing (described later) the system as a whole. It is important to keep all the information on hand for use in the redesign, as well as for future product development initiatives.

The “Plan” and “Do” phases can require a good deal of time and effort, especially because the steps involved will vary depending on factors such as the operating environment. A medical device intended for use in an operating room, for example, requires different considerations than a consumer electronic device in the home. A server or automobile would undergo a different process than that for wireless speakers or a tablet.

When it is time to put the test plan into action, several evaluations can help gauge interoperability issues.

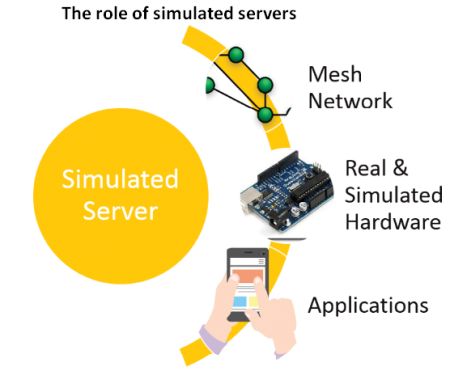

Simulation lets you test an IoT-enabled product or app without using real boards or servers. The method is particularly effective for large environments. It requires basic programming knowledge, but templates can help replicate specific tests. The simulated server allows you to evaluate scale, security, and reliability when accounting for other devices, traffic, interference, data loads or other concerns.
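To make the idea concrete, here is a minimal in-process sketch of a simulated server receiving traffic from many virtual devices, with no real boards or network involved. Everything here is an assumption made for illustration: the class `SimulatedServer`, the per-tick capacity model, and the parameter names are hypothetical, and commercial simulation tools model far more (protocols, interference, latency).

```python
import random


class SimulatedServer:
    """Stand-in for a backend that can handle a fixed number of messages per tick."""

    def __init__(self, capacity_per_tick):
        self.capacity_per_tick = capacity_per_tick
        self.accepted = 0
        self.dropped = 0
        self._used = 0

    def start_tick(self):
        self._used = 0

    def receive(self, message):
        if self._used >= self.capacity_per_tick:
            self.dropped += 1          # overload: message is lost
            return False
        self._used += 1
        self.accepted += 1
        return True


def run_simulation(num_devices, ticks, send_probability, capacity_per_tick, seed=0):
    """Let each virtual device send with the given probability on every tick."""
    rng = random.Random(seed)          # seeded so runs are repeatable
    server = SimulatedServer(capacity_per_tick)
    for tick in range(ticks):
        server.start_tick()
        for device_id in range(num_devices):
            if rng.random() < send_probability:
                server.receive({"device": device_id, "tick": tick})
    return server.accepted, server.dropped


# 100 virtual devices that always send, against a server sized for 60 per tick.
accepted, dropped = run_simulation(
    num_devices=100, ticks=10, send_probability=1.0, capacity_per_tick=60
)
```

Changing `num_devices` or `send_probability` is a cheap way to explore how the system behaves at scale before any hardware is on the bench.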

Usability evaluations account for the end user, considering human factors as opposed to machine interactions. Human factors are often overlooked during the prototyping phase of IoT products, yet they can be at odds with how a product operates. Assessments for usability help ensure a product provides an enjoyable, seamless user experience.

Testing for a device’s overall performance can be a straightforward assessment. There’s usually no preset standard protocol for performance testing, but it generally involves assigning a certain number of user interactions over a specified time period. The difficulty comes in identifying bottlenecks and deciding how to fix them.
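A rough sketch of that approach: run a fixed number of interactions, time each one, and summarize the results so that slow outliers stand out. The helper names `measure` and `summarize` and the choice of median and 95th-percentile figures are assumptions for illustration, not a prescribed protocol; the `interaction` callable stands in for whatever request the device or app actually handles.

```python
import statistics
import time


def measure(interaction, count):
    """Run `interaction` `count` times; return per-call latencies and total elapsed time."""
    latencies = []
    started = time.perf_counter()
    for i in range(count):
        t0 = time.perf_counter()
        interaction(i)
        latencies.append(time.perf_counter() - t0)
    return latencies, time.perf_counter() - started


def summarize(latencies, elapsed_s):
    """Summarize latencies (seconds) collected over `elapsed_s` seconds."""
    if not latencies or elapsed_s <= 0:
        raise ValueError("need at least one sample and a positive duration")
    ordered = sorted(latencies)
    rank = (95 * len(ordered) + 99) // 100   # nearest-rank 95th percentile
    return {
        "count": len(ordered),
        "throughput_per_s": len(ordered) / elapsed_s,
        "median_s": statistics.median(ordered),
        "p95_s": ordered[rank - 1],
        "max_s": ordered[-1],
    }


# Example with recorded latencies: most calls are fast, two are slow.
report = summarize([0.01] * 18 + [0.2, 0.5], elapsed_s=2.0)
```

A large gap between the median and the 95th-percentile or maximum latency is often the first hint of a bottleneck worth investigating.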

Regression testing checks that previously developed (and tested) software still performs correctly after it has been altered or connected with other software. This is especially important with IoT products because they interact with each other. It is also critical when new features are added during development. Common methods of regression testing include re-running previously completed tests, checking to see whether programs behave differently, and verifying that previously fixed faults have not re-emerged. Regression testing can often be automated and can complement other tests. It is a structured assessment and is essential in continuous quality models like “Plan, Do, Check, Act”.
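The core of automated regression testing can be sketched in a few lines: re-run the earlier checks against the new build and compare the outcomes with a recorded baseline. The `checksum` function below is a hypothetical device function with a deliberately introduced bug, and the helper names are assumptions for illustration.

```python
def run_suite(checks):
    """Run every check and record 'pass' or 'fail' for each by name."""
    results = {}
    for name, check in checks.items():
        try:
            check()
            results[name] = "pass"
        except AssertionError:
            results[name] = "fail"
    return results


def find_regressions(baseline, current):
    """Checks that passed in the baseline but do not pass now."""
    return sorted(
        name for name, outcome in baseline.items()
        if outcome == "pass" and current.get(name) != "pass"
    )


def checksum(payload: bytes) -> int:
    """Hypothetical device function, altered in the new build."""
    return sum(payload) % 255   # deliberate bug for this example: the modulus should be 256


def check_checksum_empty():
    assert checksum(b"") == 0


def check_checksum_wraps():
    assert checksum(bytes([200, 100])) == 44


# Outcomes recorded from the previous, known-good build.
baseline = {"checksum_empty": "pass", "checksum_wraps": "pass"}

current = run_suite({
    "checksum_empty": check_checksum_empty,
    "checksum_wraps": check_checksum_wraps,
})
regressions = find_regressions(baseline, current)
```

Here the altered `checksum` breaks a check that used to pass, so the comparison flags `checksum_wraps` as a regression even though the other check still passes.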

Data breaches are a constant concern with connected products. IoT devices can be vulnerable to cybersecurity issues, including those arising from software defects, open ports, and unencrypted communications, among other things. In the U.S., ANSI/UL 2900 was adopted in 2017 for software security. It highlights testing for vulnerabilities, software weaknesses, and malware in networked components. It applies to products that include ATMs, fire alarm controls, network-connected locking devices, smoke and gas detectors, burglar alarms, and numerous others.
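As a simple illustration of checking for the issues named above (default credentials, open ports, unencrypted communications), the sketch below audits a device configuration record. It is not a UL 2900 procedure: the configuration fields, the credential list, and the allowed-port set are all assumptions chosen for the example.

```python
# Assumed values for illustration only; a real audit would use the product's own policy.
DEFAULT_CREDENTIALS = {("admin", "admin"), ("admin", "password"), ("root", "root")}
ALLOWED_PORTS = {443, 8883}   # HTTPS and MQTT over TLS


def audit_device(config):
    """Return a list of findings for a hypothetical device configuration dict."""
    findings = []
    if (config.get("username"), config.get("password")) in DEFAULT_CREDENTIALS:
        findings.append("default credentials")
    unexpected = sorted(set(config.get("open_ports", [])) - ALLOWED_PORTS)
    if unexpected:
        findings.append("unexpected open ports: " + ", ".join(str(p) for p in unexpected))
    if not config.get("tls_enabled", False):
        findings.append("unencrypted communications")
    return findings


risky = audit_device({
    "username": "admin",
    "password": "admin",
    "open_ports": [23, 443, 1883],
    "tls_enabled": False,
})
clean = audit_device({
    "username": "svc-7f3a",
    "password": "long-unique-secret",
    "open_ports": [443],
    "tls_enabled": True,
})
```

A configuration audit like this catches only the obvious problems; it complements, rather than replaces, vulnerability scanning and penetration testing.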

Check: During the “Check” phase, the results from the previous stages must be reviewed and analyzed. With the analysis completed, it is time to identify whether improvements can be made and whether corrections from previous tests were successful. If there are still issues to be addressed, you will need to return to the “Plan” and “Do” phases. Specifically, developers may refer to the RTM to check whether the design has met all the expected requirements and whether the tests exercised all the vulnerable functions.

Act: Based on the observations and failures of previous stages, change anything that did not work and continue any practices that were effective. It is important to iterate the PDCA process until a product meets interoperability requirements satisfactorily.
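The iteration described above can be sketched as a loop that repeats the four phases until the Check phase reports no failures or a cycle limit is reached. The function `run_pdca`, its callback parameters, and the toy device state are hypothetical; in practice each phase involves people and tools rather than a single function call.

```python
def run_pdca(plan, do, check, act, max_cycles=5):
    """Repeat Plan, Do, Check, Act until Check finds no failures or cycles run out."""
    feedback = None
    for cycle in range(1, max_cycles + 1):
        test_plan = plan(feedback)      # Plan: decide what to test
        results = do(test_plan)         # Do: execute the tests
        failures = check(results)       # Check: analyze the results
        if not failures:
            return cycle, True
        feedback = act(failures)        # Act: fix what did not work
    return max_cycles, False


# Toy device state: two interoperability defects are open at the start.
open_defects = {"pairing", "telemetry"}
ALL_CHECKS = ["pairing", "telemetry", "ota_update"]


def plan(feedback):
    # Re-run every check each cycle so earlier fixes are regression tested too.
    return list(ALL_CHECKS)


def do(test_plan):
    return {name: name not in open_defects for name in test_plan}


def check(results):
    return sorted(name for name, passed in results.items() if not passed)


def act(failures):
    open_defects.discard(failures[0])   # resolve one defect per cycle
    return failures


outcome = run_pdca(plan, do, check, act)
```

With two open defects and one fix per cycle, the loop needs three passes before the Check phase comes back clean.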

It is important to remember that products on the market still require vigilance for security and interoperability. To that end, you should plan to issue regular updates, upgrades, and patches with a well-defined testing and release process. Such practices will help protect against zero-day style exploits and keep the product relevant as new software and hardware capabilities emerge.

With no specific interoperability standards in place to ensure products work seamlessly together, manufacturers can still take steps to help protect their devices, their reputation, and their brand.

References

Intertek Group plc

Gartner Says 8.4 Billion Connected “Things” Will Be in Use in 2017, Up 31% From 2016.

Security Sales and Integration. Toll Brothers Builds Smart Home Appeal in New Construction Market.
