If an instrument is to give accurate measurements, it must first have been calibrated to a standard.

In metrology, a standard (or etalon) is an object, system, or experiment that bears a defined relationship to a unit of measurement of a physical quantity. Standards are the fundamental reference for a system of weights and measures, against which all other measuring devices are compared.

For example, consider the standard for length. The SI base unit, and the current standard, for length is the meter. It is defined as the length of the path traveled by light in vacuum during a time interval of 1/299,792,458 of a second.

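Thus our standard for length is tied directly to the speed of light. Since 1983 that speed has been fixed by definition at exactly 299,792,458 meters per second, a step that was possible only because it had already been measured so accurately. It is interesting to explore how we came to measure the speed of light accurately enough to use it as a standard.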

Ancient thinkers had inquiring minds and were inclined to investigate the world around them, including the enigmatic phenomenon of light. But a complete understanding of light’s dual nature and its speed of propagation eluded theoreticians and experimenters for many centuries, and even today some details remain baffling. Up to and including Descartes, it was widely held that light traveled instantaneously.

In contrast, the relatively slow speed of sound (about 768 mph, or 343 m/s, in dry air at 20 °C) was well known. It had been observed that the flash from an artillery piece was seen “immediately” while the sound arrived later, with a delay that grew with the observer’s distance from the gun. This was taken to confirm that light traveled instantaneously, although all it actually proved was that light travels much faster than sound.

Ancient scientists were well aware of the nature of sound. To them, it was evident that a vibrating body imparted wavelike oscillations to the air, which caused the eardrum to vibrate at the same frequency and with corresponding intensity. It was assumed that nerves carried the auditory information to the brain, where it was perceived as sound. Later, the same process was attributed to luminous bodies, light, and the eye, although light was still believed to travel instantaneously.

Galileo devised a method for measuring the speed of light. Two people stand at various distances apart, each carrying a covered lantern. The first uncovers his lantern, and on seeing the light, the second does likewise. The first experimenter then measures the time elapsed until he sees the answering light, and from the distance separating the two, the speed of light can be deduced. Galileo actually tried this at a distance of about a mile. The result was inconclusive, since any delay was swamped by the experimenters’ reaction times, but the method recognized that such experiments would have to be two-way: a one-way speed-of-light measurement would not be feasible because of the difficulty of synchronizing two separate clocks.
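To see why the lantern experiment could not succeed, here is a rough Python sketch comparing the light’s round-trip time over one mile with a human reaction time. It uses the modern speed of light (186,282 miles per second) and an assumed reaction time of 0.2 s; both constants are illustrative, not figures Galileo had.

```python
# Why Galileo's lantern experiment was hopeless: compare the light's
# round-trip time with a human reaction time.
C_MILES_PER_S = 186_282.0   # modern speed of light in miles per second
REACTION_TIME_S = 0.2       # assumed, illustrative human reaction time


def round_trip_time_s(separation_miles: float) -> float:
    """Time for light to travel out to the second lantern and back."""
    return 2 * separation_miles / C_MILES_PER_S


if __name__ == "__main__":
    t = round_trip_time_s(1.0)
    print(f"Round trip over 1 mile: {t * 1e6:.1f} microseconds")
    print(f"Reaction time is roughly {REACTION_TIME_S / t:,.0f} times longer")
```

The round trip takes about 10.7 microseconds, thousands of times shorter than the second person’s reaction, so whatever delay the first experimenter measures is almost entirely reaction time.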

The first successful approximation of the speed of light took place on an astronomical scale, where the considerable distance involved resulted in an observable time interval. In 1676, Ole Römer, a Danish astronomer then working at the Paris Observatory, was engaged in an intensive study of Io, a moon of Jupiter.

It is the nature of a satellite in a circular orbit to revolve about its parent body at a steady rate, but Römer’s observations indicated something quite different. As seen by an earthly observer, Io periodically passed behind Jupiter and blinked out of view. What was unexpected is that for several months these eclipses occurred farther and farther behind schedule; then, for a corresponding period, they came progressively earlier.

Seventeenth-century astronomers were astute and mathematically sophisticated, and it did not take Römer long to find an explanation. The variations in the apparent timing of Io’s eclipses were due to the changing distance between earth and Jupiter, a consequence of their different orbits. As the two planets drew closer, light from Io had less distance to travel to the observers, so the interval between eclipses appeared shorter; as they drew apart, it appeared longer.

From this insight, it was easy to calculate the speed of light from the distance between the earth and the sun, which at the time was approximately known. Römer calculated that light required 22 minutes to cross the earth’s orbit, implying that (after deducting the sun’s radius and the earth’s radius, which are small by comparison) light took a little less than eleven minutes to travel from the sun’s surface to the earth’s surface. That figure is reasonably close to the modern value of about eight minutes and twenty seconds.
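The arithmetic behind this comparison can be sketched in a few lines of Python. The 22-minute crossing time comes from the text; the modern Earth-Sun distance and speed of light are assumptions added for comparison, values Römer did not have.

```python
# Light-travel times implied by the 22-minute figure, checked against modern values.
AU_KM = 149_597_870.7    # modern Earth-Sun distance (assumption, not available to Römer)
C_KM_S = 299_792.458     # modern speed of light, km/s

crossing_time_s = 22 * 60            # historical figure: light crosses Earth's orbit in 22 min
one_way_s = crossing_time_s / 2      # Sun to Earth is half the orbit's diameter
modern_one_way_s = AU_KM / C_KM_S    # modern Sun-to-Earth light time

# Speed implied by pairing the 22-minute figure with the modern orbital diameter
implied_speed_km_s = 2 * AU_KM / crossing_time_s

print(f"One way (historical): {one_way_s / 60:.1f} min")
print(f"One way (modern):     {modern_one_way_s:.0f} s")
print(f"Implied speed: {implied_speed_km_s:,.0f} km/s "
      f"({implied_speed_km_s / C_KM_S:.0%} of the modern value)")
```

Paired with the modern Earth-Sun distance, the 22-minute figure gives roughly 226,700 km/s (about 140,800 miles per second), around three-quarters of the modern value, so the timing error by itself accounts for most of the early shortfall.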

To find the speed of light using astronomical methods, it was necessary to know both the distance involved and the time required to traverse that distance. All relative distances in the known parts of the solar system had been ascertained prior to the 1670s, using observation and geometrical analysis. Now astronomers endeavored to find the absolute distance from Earth to Mars, whereupon everything would fall into place.

This was accomplished by observing Mars simultaneously from two widely separated locations on the earth’s surface. The parallax, the difference in Mars’s apparent position against the much more distant background stars as seen from the two sites, provided the key piece of information: the absolute distance between Earth and Mars. Because the relative distances were already known, this in turn revealed the distance of the earth from the sun, and from that the speed of light could be calculated.
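The chain of reasoning, from parallax angle to Earth-Mars distance to Earth-Sun distance to the speed of light, can be sketched as follows. This is a minimal illustration using the small-angle approximation; the baseline (7,000 km), parallax (25.4 arcseconds), and Earth-Mars distance in astronomical units (0.38 AU) are hypothetical inputs chosen for the example, not the historical measurements.

```python
import math

ARCSEC_PER_RAD = 180 * 3600 / math.pi   # about 206,265 arcseconds per radian


def distance_from_parallax_km(baseline_km: float, parallax_arcsec: float) -> float:
    """Small-angle approximation: distance = baseline / parallax angle (radians)."""
    return baseline_km / (parallax_arcsec / ARCSEC_PER_RAD)


def earth_sun_distance_km(earth_mars_km: float, earth_mars_au: float) -> float:
    """Scale the absolute Earth-Mars distance by its known relative size in AU."""
    return earth_mars_km / earth_mars_au


def speed_of_light_km_s(au_km: float, orbit_crossing_s: float) -> float:
    """Light crosses the orbit's diameter (2 AU) in the measured time."""
    return 2 * au_km / orbit_crossing_s


if __name__ == "__main__":
    # Hypothetical inputs: 7,000 km baseline, 25.4 arcsec parallax, Mars at 0.38 AU.
    d_mars = distance_from_parallax_km(7_000, 25.4)
    au = earth_sun_distance_km(d_mars, 0.38)
    print(f"Earth-Mars: {d_mars:,.0f} km, Earth-Sun: {au:,.0f} km")
    print(f"With a 22-minute crossing: {speed_of_light_km_s(au, 22 * 60):,.0f} km/s")
```

The key design point is that only one absolute distance has to be measured; every other distance follows by scaling the relative values that were already known.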

Shortly thereafter, Römer or his contemporary, Christiaan Huygens, calculated the speed of light at 125,000 miles per second, about two-thirds of our current figure. The discrepancy arose mainly from Römer’s error in the time required for light to traverse the earth’s orbit. Nevertheless, these seventeenth-century thinkers were closing in on the correct value of the speed of light as we now understand it.

James Bradley (1693-1762), an eminent English astronomer, announced in 1728 his discovery of the aberration of starlight, a slight annual shift in the apparent positions of stars caused by the earth’s orbital motion, not to be confused with parallax. This led to a further refinement in our knowledge of the speed of light. By measuring the angular variation in the apparent direction of incoming starlight in relation to the earth’s position in its nearly circular orbit around the sun, Bradley arrived at a new and more accurate figure for the speed of light: 185,000 miles per second, which differs by less than one percent from the currently accepted value.
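Bradley’s method rests on a simple relation: in the classical picture, the maximum tilt α of incoming starlight satisfies tan α = v/c, where v is the earth’s orbital speed. The Python sketch below inverts that relation. The inputs (29.78 km/s and 20.5 arcseconds) are modern values assumed for illustration, not Bradley’s own data.

```python
import math

ARCSEC_PER_RAD = 180 * 3600 / math.pi   # arcseconds per radian


def speed_of_light_from_aberration(orbital_speed_km_s: float, aberration_arcsec: float) -> float:
    """Classical aberration: the largest tilt alpha obeys tan(alpha) = v / c."""
    alpha = aberration_arcsec / ARCSEC_PER_RAD
    return orbital_speed_km_s / math.tan(alpha)


if __name__ == "__main__":
    # Modern values assumed for illustration: v = 29.78 km/s, alpha = 20.5 arcseconds.
    c = speed_of_light_from_aberration(29.78, 20.5)
    print(f"c = {c:,.0f} km/s = {c / 1.609344:,.0f} miles per second")
```

Because the angle is only about 20 arcseconds, the whole method depends on measuring star positions with great precision; a one-arcsecond error changes the result by roughly 5%.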

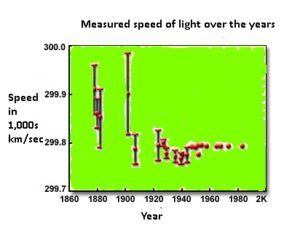

Increasingly accurate measurements of the speed of light were made around 1850 by two French rivals, Hippolyte Fizeau and Jean Léon Foucault, who used a rapidly rotating toothed wheel and a rotating mirror, respectively, to chop or deflect a beam of light so that its travel time over a measured distance could be determined. These methods were successful and yielded closer approximations, but they were surpassed by the measurements of Albert Michelson (1852-1931), well known for the Michelson-Morley experiment, an unsuccessful attempt to detect a luminiferous aether.
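The toothed-wheel idea reduces to a short formula. Light leaves through a gap, travels to a distant mirror and back, and is blocked on its return once the wheel has turned half a tooth spacing. The sketch below models only Fizeau’s wheel, not Foucault’s rotating mirror. The inputs are the figures commonly quoted for Fizeau’s 1849 setup (about 8.6 km, 720 teeth, roughly 12.6 revolutions per second); treat them as illustrative.

```python
def fizeau_speed_of_light(distance_m: float, teeth: int, revs_per_s: float) -> float:
    """Toothed-wheel method.

    Light leaves through a gap, travels to a distant mirror and back (2 * L),
    and is blocked on return if the wheel has meanwhile turned half a tooth
    spacing, i.e. 1 / (2 * teeth) of a revolution. That takes
    t = 1 / (2 * teeth * revs_per_s), so c = 2 * L / t = 4 * L * teeth * revs_per_s.
    """
    return 4 * distance_m * teeth * revs_per_s


if __name__ == "__main__":
    # Figures commonly quoted for Fizeau's 1849 setup (treat as illustrative).
    c = fizeau_speed_of_light(8_633, 720, 12.6)
    print(f"c = {c / 1000:,.0f} km/s")
```

With these inputs the formula gives about 313,000 km/s, within about 5% of the modern value, which shows how a purely mechanical measurement over a few kilometers could replace astronomical distances.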

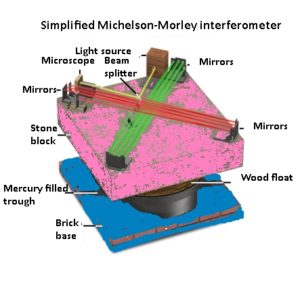

Michelson was intensely interested in determining the speed of light. He adapted Foucault’s spinning-mirror apparatus on a larger scale and with better optics, which yielded a figure of 186,300 miles per second for the speed of light. Michelson was acclaimed at the time for establishing the speed of light to great accuracy, but now he is better known for his role in the Michelson-Morley experiment. In this endeavor, Michelson was the theoretician and Edward Morley (1838-1923), a master mechanic as well as scientist, built the instrumentation.

It was believed at the time, in part correctly, that light consisted of wavelike disturbances traveling through space, analogous to the propagation of sound through air or other material media such as water, where successive changes in density constitute the waves. It followed that there must be a luminiferous medium, the aether, pervading space to carry these vibrations. Moreover, it was known that the earth traveled rapidly through space, at about 30 km/s in its orbit around the sun. It therefore seemed evident that the apparent speed of light would differ depending on whether it was measured with or against the “aether wind” experienced by an observer moving through the aether.

In the popular imagination, Michelson and Morley were stationed on mountaintops some distance apart and made their measurements as Galileo had envisioned with covered lanterns, although with much more precise instruments. In actuality, the experiment was confined to a single room in Cleveland, Ohio. A half-silvered mirror split a beam from a stable light source into two perpendicular beams; fully reflective mirrors sent each beam back along its arm, and the two were then recombined, producing an interference pattern of light and dark fringes that the experimenters observed. A difference in the light’s travel time along the two arms would show up as a shift in these fringes. The entire apparatus floated on a pool of mercury so that it could be rotated, changing its alignment with respect to the earth’s supposed “absolute” motion through space.
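What the aether hypothesis predicted can be estimated with classical travel times: light going along and back an arm parallel to the aether wind takes (2L/c)/(1 − v²/c²), while light crossing the wind takes (2L/c)/√(1 − v²/c²). Rotating the apparatus by 90 degrees swaps the arms’ roles, doubling the change. The Python sketch below assumes an effective arm length of about 11 m (mirrors folded the light path), a wavelength of 550 nm, and the earth’s orbital speed of 30 km/s; these are illustrative inputs rather than exact experimental parameters.

```python
import math

C = 299_792_458.0   # speed of light, m/s


def expected_fringe_shift(arm_length_m: float, earth_speed_m_s: float, wavelength_m: float) -> float:
    """Fringe shift the aether hypothesis predicts when the apparatus is turned 90 degrees."""
    beta2 = (earth_speed_m_s / C) ** 2
    # Classical round-trip times for an arm parallel to, and across, the aether wind
    t_parallel = (2 * arm_length_m / C) / (1 - beta2)
    t_across = (2 * arm_length_m / C) / math.sqrt(1 - beta2)
    path_difference_m = C * (t_parallel - t_across)
    # Rotating by 90 degrees swaps the arms' roles, doubling the change
    return 2 * path_difference_m / wavelength_m


if __name__ == "__main__":
    # Assumed values: ~11 m effective arm, Earth's orbital speed, yellow-green light.
    shift = expected_fringe_shift(11.0, 30_000.0, 5.5e-7)
    print(f"Predicted shift: {shift:.2f} fringe")
```

Under these assumptions the aether theory predicts a shift of about 0.4 fringe, a displacement the apparatus was sensitive enough to detect easily, which is why the absence of any such shift was so striking.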

To their astonishment, the researchers were unable to detect any aether wind. In other words, light traveled at the same apparent speed whether it was measured with or against the direction of Earth’s travel. This well-publicized result posed a serious problem for classical, i.e. Newtonian, physics, one that was not resolved until Albert Einstein’s Special Theory of Relativity of 1905.
