Accuracy, precision, and resolution are key parameters, each with a distinct meaning and distinct implications for system design and for confidence in the measurement.

Design, test, and measurement implications

Low or moderate accuracy may seem a detriment, but that isn’t necessarily the case. If precision is good enough and there is a way to calibrate the readings, the inaccuracy can be characterized and compensated for. This can be done by adjusting the instrument itself or by numerically correcting the data after it has been acquired.

The latter is often done when instrumentation is sent to a calibration lab. The unit being evaluated is compared to a more accurate reference standard, and its accuracy error is noted on the calibration report. This deviation can then be factored into the test results.
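As a minimal sketch of such a numerical correction, assume the calibration report gives a fixed offset error and a fractional gain error, so that a raw reading equals the true value times (1 + gain error) plus the offset. That model, the function name `apply_calibration`, and the numbers below are illustrative assumptions, not taken from any specific instrument or report.

```python
def apply_calibration(raw_readings, offset_error, gain_error=0.0):
    """Correct raw readings using errors noted on a calibration report.

    Assumed error model (an illustration, not a standard):
        raw = true * (1 + gain_error) + offset_error
    """
    return [(r - offset_error) / (1.0 + gain_error) for r in raw_readings]


# Hypothetical raw readings from an instrument that reads 1% high
# and has a +0.02 unit offset.
raw = [10.12, 20.22, 30.32]
corrected = apply_calibration(raw, offset_error=0.02, gain_error=0.01)
print(corrected)
```

The correction only helps if the instrument is repeatable: the same error model must hold from one reading to the next.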

Inaccuracy is not necessarily determined solely against a single reference value. For example, many sensors are non-linear (an accuracy issue) but consistent (a precision attribute) in their response to physical stimulus, with thermocouples used for temperature measurement being a very common case. This repeatable error can be removed by using correction and calibration tables such as those published by NIST (National Institute of Standards and Technology) in the US.

This can be done using curve-correction circuitry (as was common in the older, mostly analog days), or by applying curve-fitting equations or a look-up table to the raw data. Thus, the inaccurate thermocouple is transformed into an accurate sensor.
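The look-up-table approach can be sketched as linear interpolation between tabulated points. The table values below are made up purely for illustration and are not NIST data; a real design would load the published table or polynomial coefficients for the specific thermocouple type. The names `TABLE` and `lookup_temperature` are likewise hypothetical.

```python
from bisect import bisect_right

# Hypothetical (voltage in mV, temperature in deg C) pairs.
# Illustrative values only; NOT taken from any published table.
TABLE = [(0.0, 0.0), (1.0, 25.0), (2.0, 49.0), (3.0, 72.0), (4.0, 94.0)]


def lookup_temperature(mv):
    """Convert a raw sensor voltage to temperature by linear
    interpolation between the two nearest table entries."""
    xs = [point[0] for point in TABLE]
    if not xs[0] <= mv <= xs[-1]:
        raise ValueError("voltage outside the range covered by the table")
    # Index of the upper neighbor, clamped so the top endpoint is handled.
    i = min(bisect_right(xs, mv), len(xs) - 1)
    x0, y0 = TABLE[i - 1]
    x1, y1 = TABLE[i]
    return y0 + (y1 - y0) * (mv - x0) / (x1 - x0)


print(lookup_temperature(1.5))
```

A denser table or a fitted polynomial reduces the interpolation error between points, at the cost of more memory or more computation.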

Assessing the tradeoffs

It’s simplistic to say that you want a system with a trifecta of high accuracy, precision, and resolution, all at the same time. Achieving all three simultaneously is usually costly and technically challenging.

The real question is not only how much of each you really need but also what tradeoffs you are willing to make among the three factors. Another question is whether there is a threshold of difficulty beyond which achieving that extra little bit of improvement (no pun intended) incurs a dramatic step-up in the challenge.

A consumer weight scale, for example, may need a resolution of only 0.5 pounds (0.2 kg), but users would probably appreciate reasonably good accuracy (perhaps to one pound) along with high repeatability. If they step on the scale ten times in a row, they expect to see the same reading, even if the accuracy is only about a pound. After all, precision is something they will easily see, while accuracy is harder to check at home: it is difficult to tell whether the displayed number is in error by one-half pound or even a pound. Further, they may be more interested in daily weight gain or loss than in the absolute value.
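To make the distinction concrete, here is a small sketch that separates the two quantities for a set of repeated readings: the average offset from a known reference (accuracy error) and the scatter among the readings (precision, or repeatability). The readings, the 150-pound reference weight, and the function name `summarize` are hypothetical.

```python
from statistics import mean, stdev


def summarize(readings, reference):
    """Return (bias, spread) for repeated readings of a known reference.

    bias   : mean reading minus reference (accuracy error)
    spread : sample standard deviation (repeatability / precision)
    """
    return mean(readings) - reference, stdev(readings)


# Ten hypothetical readings (pounds) of a 150 lb reference weight
# on a scale with 0.5 lb resolution.
readings = [151.0, 151.0, 151.5, 151.0, 151.0,
            150.5, 151.0, 151.0, 151.0, 151.0]
bias, spread = summarize(readings, reference=150.0)
print(f"bias = {bias:+.2f} lb, spread = {spread:.2f} lb")
```

In this made-up data set the scale reads about a pound high but is tightly repeatable, which is the case where day-to-day differences remain meaningful even though the absolute value is off.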

Achieving higher resolution usually requires careful and even extreme attention to every detail in the system or signal chain, including sensor, signal conditioning, power-supply noise, EMI, temperature-related drift, and more.

Somewhat counterintuitively, there is a way to use noise in a system to achieve higher effective resolution than the design or implementation natively provides. This is done by adding pseudorandom white noise from an analog or digital source to the channel, taking multiple readings, and then applying a weighted averaging algorithm.

This way, you can achieve another bit or two of resolution depending on how many repeated readings you have time to make. Since the resolution is finite, there will be some signal values that are on or very close to the border between two readings. If you add the noise, the reading will “dither” between the two values. The ratio of readings that fall on one side or the other due to the added noise can be calculated and used to increase apparent resolution.

Of course, this only works if the measured signal is static during the repeated measurements, such as a weight on a scale. In addition to that requirement, the tradeoff is the need to perform those additional conversions (which takes more time) and more post-acquisition numerical processing.
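The dithering idea can be illustrated with a small simulation. This is a sketch under stated assumptions, not a description of any particular converter: the quantizer is ideal with a step of one LSB, the added noise is Gaussian at 0.5 LSB rms, and a plain (unweighted) average is used in place of a weighted one. The function names and parameter values are illustrative.

```python
import random


def quantize(x, lsb=1.0):
    """Ideal quantizer: round to the nearest multiple of one LSB."""
    return round(x / lsb) * lsb


def dithered_reading(true_value, n_samples, noise_rms=0.5, lsb=1.0, seed=0):
    """Average n_samples quantized readings of a static signal,
    each taken with Gaussian dither noise added before quantizing."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    total = 0.0
    for _ in range(n_samples):
        total += quantize(true_value + rng.gauss(0.0, noise_rms * lsb), lsb)
    return total / n_samples


true_value = 10.3                      # static input, in LSB units
print(quantize(true_value))            # no dither: stuck at 10.0
print(dithered_reading(true_value, 4096))
```

Without dither, every reading of the static input returns the same code, so averaging gains nothing. With dither, the proportion of readings landing on each adjacent code carries the sub-LSB information, and the average moves toward the true value. As a rule of thumb for white noise, each fourfold increase in the number of averaged readings yields roughly one additional bit.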

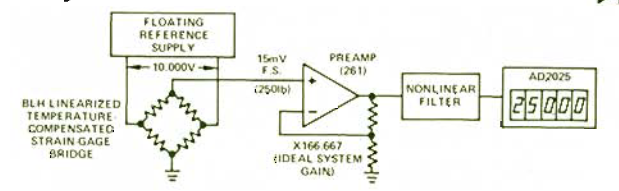

An example of the art and skill needed to simultaneously achieve high accuracy, precision, and resolution in a project is the weight scale designed and built in 1976 (yes, nearly 50 years ago) by the late analog-circuit legend Jim Williams. While working at the MIT Nutrition Lab very early in his career, he was given the challenge of building a scale on which people ranging from babies to adults could be weighed precisely enough to measure each bite of food they ate, even a tiny one.

The scale had to resolve 0.01 pounds over a 300-pound full-scale range (roughly 30 parts per million, or a small donut bite for a 300-pound person), have an absolute accuracy within 0.02 percent, be portable, and never require calibration or adjustment. Every subtle source of imprecision, error, drift, or inaccuracy had to be identified and eliminated, or at least greatly minimized, to achieve this level of performance based on a carefully constructed topology (Reference 2 and Figure 1).
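The arithmetic behind those figures is worth working through. The sketch below uses only the numbers stated above; treating the 0.02 percent accuracy as a percentage of full scale, and expressing the resolution as an equivalent converter bit count, are assumptions added for illustration.

```python
import math

resolution_lb = 0.01     # smallest weight change to resolve
full_scale_lb = 300.0    # full-scale range
accuracy_pct = 0.02      # absolute accuracy (assumed to be % of full scale)

# Number of distinguishable steps across the range: 30,000.
counts = full_scale_lb / resolution_lb

# Resolution as a fraction of full scale: about 33 ppm,
# commonly rounded to "30 ppm".
ppm = resolution_lb / full_scale_lb * 1e6

# Minimum bits for an ideal converter to represent that many steps: 15.
bits = math.ceil(math.log2(counts))

# Allowed absolute error at full scale: 0.06 lb, or six resolution steps.
accuracy_lb = full_scale_lb * accuracy_pct / 100.0

print(f"{counts:.0f} counts, {ppm:.1f} ppm, {bits} bits, "
      f"+/-{accuracy_lb:.2f} lb")
```

Note that the accuracy requirement is looser than the resolution requirement by a factor of six, which illustrates that the two parameters are specified independently.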

[Side note: I interviewed Jim for EDN about 20 years ago, asking what he would do differently given the many new components, processors, design tools, and more that had become available. That interview is “lost” in their online archives. Still, I recall him saying that while there were some things he would change, many of the choices he made were due to fundamentals of accurate, precise, high-resolution measurement, component realities, and basic physics, and so would remain the same.]

How do you determine the balance between accuracy, precision, and resolution? The answer is the expected one: it depends. There are many applications for which higher performance in one or perhaps two of these factors is essential, while lesser performance in the other(s) is acceptable or even unimportant.

This requires a careful assessment of the system’s real, not desired, needs, and making sure you don’t confuse the true meaning of each of the three parameters, even though they often interact with each other to an extent that varies with the application. In short, there usually is no easy answer. In many cases, “less is more” or at least easier to achieve and just as meaningful.

Unneeded over-specifying may look good “on paper” and seem to provide some performance cushion or a comfort factor, but it often leads to major unexpected design headaches. You also have to figure out how you will test the accuracy, precision, and resolution with an adequate level of confidence, which may be a major stumbling block as well.

External References

- Physics Today, “A more fundamental International System of Units”

- Jim Williams, EDN, “This 30-ppm scale proves that analog designs aren’t dead yet”

- Texas Instruments, “The secret of using noise to improve your ADC’s performance”

- Analog Devices, “Chapter 20: Analog to Digital Conversion”

- Photonics, “Understanding Resolution, Accuracy, and Repeatability in Micromotion Systems”
