All measurements need reliable, accurate equipment that’s traceable to worldwide standards. Without that, measurements have no value.

From ensuring product quality and safety in a factory to letting anyone use a computer or phone, accurate measurements are critical in every aspect of our lives. In the automotive industry, accurate measurements of components and their tolerances ensure that the parts fit together and that the vehicle is not only drivable but safe. In electronics, accurate measurements of components’ electrical characteristics ensure that the product works and meets its specifications.

Verifying that a device meets those specifications requires measuring it, and that means measurement equipment. But how do you know whether the measurement equipment itself is operating within its required specifications, be those the manufacturer’s published specifications or tighter tolerances as needed? That’s where calibration and traceability come in.

Why is traceability important?

Every tool used to investigate and troubleshoot your electrical systems needs calibration. The standards used to calibrate those tools must themselves be calibrated, by equipment with an even higher level of accuracy. Each calibration step in the chain must trace all the way back to the highest-level standards. This is the domain of metrology, the science of measurement.

The International Vocabulary of Metrology (VIM) provides a technical definition of calibration and the concepts that go with it (Figure 1). To paraphrase: calibration is a two-step process that first establishes a relationship between a device’s indications and the values provided by measurement standards or references, and then uses that relationship to obtain measurement results from the device’s indications. Each of these calibrations can form one link in what the VIM defines as metrological traceability: a documented, unbroken chain of calibrations, each contributing to the uncertainty of the final measurement.

As you can see, a discussion of calibration and traceability can quickly become very technical, because understanding how all the pieces fit together takes a string of definitions. For this discussion, we can simply think of traceability as a chain of measurements, instrument by instrument, that leads us back to the International System of Units (SI).

Standards are key

Metrology has evolved alongside technology. Every advancement in metrology both requires and enables more precise measurement capabilities, leading to improvements in research, development, and manufacturing. As measurement equipment becomes more accurate, the metrology industry develops better reference devices and methods. Looking back to MIL-STD-120 in 1950, we sought references, such as voltage and resistance sources, that were five to ten times more accurate than the device under test. By MIL-STD-45662A in 1988, the required ratio had dropped to four times.
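The accuracy ratios above can be expressed as a simple check. The sketch below is a minimal illustration, not the procedure any of these standards prescribes: it divides the device-under-test tolerance by the reference’s uncertainty, assuming both are stated in the same unit as ± half-widths. The function names and the DMM values are hypothetical.

```python
def accuracy_ratio(uut_tolerance: float, reference_uncertainty: float) -> float:
    """Ratio of the device-under-test (UUT) tolerance to the reference's uncertainty.

    Assumes both values are given in the same unit as +/- half-widths.
    """
    if uut_tolerance <= 0 or reference_uncertainty <= 0:
        raise ValueError("tolerance and uncertainty must be positive")
    return uut_tolerance / reference_uncertainty


def meets_ratio(uut_tolerance: float, reference_uncertainty: float, required: float) -> bool:
    """True if the reference is at least `required` times better than the UUT tolerance."""
    return accuracy_ratio(uut_tolerance, reference_uncertainty) >= required


# Hypothetical DMM range with a +/-0.05 V tolerance, checked with a
# reference source whose uncertainty is +/-0.01 V (a ratio of about 5:1).
uut_tol, ref_unc = 0.05, 0.01
print(f"ratio = {accuracy_ratio(uut_tol, ref_unc):.1f}:1")
print("meets 10:1:", meets_ratio(uut_tol, ref_unc, 10.0))
print("meets 4:1: ", meets_ratio(uut_tol, ref_unc, 4.0))
```

With these made-up numbers, the reference would satisfy a 4:1 requirement but not a 10:1 one.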

In 1995, with ANSI/NCSL Z540.1, we began accounting for the uncertainty of the whole measurement: all factors affecting a measurement, not just the reference devices. That includes accessories and the calibration uncertainty of the reference devices, which ties into traceability further up the chain. This approach further developed into risk-based evaluations with documents such as ANSI/NCSL Z540.3, which use the risk of falsely accepting the equipment under calibration as the driving metric to ensure that calibrations are adequate.
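To make the risk-based idea more concrete, here is a minimal sketch of two quantities often discussed alongside Z540.3: the test uncertainty ratio (TUR), taken here as the span of the tolerance divided by twice the 95% expanded uncertainty of the calibration process, and the probability of false acceptance (PFA). The PFA calculation assumes, purely for illustration, that the unit’s true error and the calibration measurement error are independent, zero-mean normal distributions; real evaluations may use other models. The function names and example numbers are hypothetical.

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def tur(lower_limit: float, upper_limit: float, expanded_uncertainty_95: float) -> float:
    """Test uncertainty ratio: tolerance span / (2 * 95 % expanded uncertainty)."""
    return (upper_limit - lower_limit) / (2.0 * expanded_uncertainty_95)


def pfa(tol: float, sigma_uut: float, u_meas: float, steps: int = 4000) -> float:
    """Probability of false acceptance for a symmetric tolerance of +/-tol.

    Assumes the UUT's true error ~ N(0, sigma_uut) and the measurement error
    ~ N(0, u_meas), independent. A false accept occurs when the true error is
    outside +/-tol but the measured value (true error + measurement error) is inside.
    """
    def integrand(x: float) -> float:
        # Density of a true error x, times the chance the reading lands in tolerance.
        density = math.exp(-0.5 * (x / sigma_uut) ** 2) / (sigma_uut * math.sqrt(2.0 * math.pi))
        p_accept = normal_cdf((tol - x) / u_meas) - normal_cdf((-tol - x) / u_meas)
        return density * p_accept

    # Integrate the upper tail (x > tol) out to 10 sigma with the trapezoid rule,
    # then double it, because the problem is symmetric about zero.
    a, b = tol, tol + 10.0 * sigma_uut
    h = (b - a) / steps
    total = 0.5 * (integrand(a) + integrand(b))
    for i in range(1, steps):
        total += integrand(a + i * h)
    return 2.0 * total * h


# Hypothetical: tolerance +/-1.0 mV, UUT population std dev 0.5 mV
# (about 95 % in tolerance), calibration U95 = 0.25 mV with k = 2.
tol, sigma_uut, U95 = 1.0, 0.5, 0.25
print(f"TUR = {tur(-tol, tol, U95):.1f}:1")
print(f"PFA = {pfa(tol, sigma_uut, U95 / 2.0):.4%}")
```

Z540.3 is commonly summarized as limiting PFA to 2%, with a TUR of at least 4:1 as an alternative where PFA cannot be estimated; the standard itself should be consulted for the exact requirements.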

SI revisions

Each advancement in metrology pushes us forward while requiring a look back at the same time: any calibration or new measurement technology requires comparison against the SI. Until recently, the SI still relied in part on physical artifacts and material properties. The kilogram was defined by a physical artifact, and the definitions of the kelvin, ampere, and mole depended on a material property or on that artifact.

In 2019, the SI was revised so that all seven base units are defined by fixed values of physical constants, all of which are shown in Figure 1. This change was necessary because an artifact can change over time. The kilogram’s artifact was carefully stored and handled to prevent wear and contamination, but over time some change is inevitable, and any such change throws off every measurement traced to it. That was a level of uncertainty we had to live with. By fixing the numerical values of the constants, that source of drift has been eliminated, and because the units now reference unchanging physical phenomena, uncertainty has been reduced.
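Because the defining constants now have exact values, quantities built from them are exact too. The sketch below lists the seven defining constants of the revised SI and, as an illustration that goes beyond this article, computes the Josephson constant 2e/h and the von Klitzing constant h/e², which underpin the intrinsic voltage and resistance standards used in electrical metrology. Python floats round these exact decimal values to double precision.

```python
# Exact values of the seven defining constants of the SI (2019 revision).
DEFINING_CONSTANTS = {
    "delta_nu_Cs": (9_192_631_770, "Hz"),   # caesium hyperfine transition frequency
    "c": (299_792_458, "m/s"),              # speed of light in vacuum
    "h": (6.626_070_15e-34, "J s"),         # Planck constant
    "e": (1.602_176_634e-19, "C"),          # elementary charge
    "k": (1.380_649e-23, "J/K"),            # Boltzmann constant
    "N_A": (6.022_140_76e23, "1/mol"),      # Avogadro constant
    "K_cd": (683, "lm/W"),                  # luminous efficacy of 540 THz radiation
}

h = DEFINING_CONSTANTS["h"][0]
e = DEFINING_CONSTANTS["e"][0]

K_J = 2 * e / h   # Josephson constant, Hz/V (basis of Josephson voltage standards)
R_K = h / e ** 2  # von Klitzing constant, ohm (basis of quantum Hall resistance standards)

print(f"K_J = {K_J / 1e9:.6f} GHz/V")
print(f"R_K = {R_K:.5f} ohm")
```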

Calibration factors affect accuracy and uncertainty

Accuracy and uncertainty go hand in hand, though according to the VIM, uncertainty and accuracy are two distinct concepts. Uncertainty is a quantitative figure while accuracy is always considered qualitative. Something can be more accurate or less accurate than something else, but when expressing a value associated with performance, we use uncertainty.

Factors that affect measurement uncertainty, and ultimately accuracy, include the calibration standard used and the uncertainty of its own calibrated functions, as well as the measurement instrument’s resolution, stability, repeatability, and calibration interval, and the measurement environment. Each instrument used in the calibration chain has a place in the hierarchy, with the SI at the top. A digital multimeter, for example, is calibrated by a multifunction calibrator. That multifunction calibrator must itself be calibrated by a more accurate multi-product bench calibrator in a calibration lab. In turn, that bench calibrator was calibrated by even more accurate references at a National Metrology Institute (NMI) or another high-level laboratory, such as a primary standards lab, that houses its own intrinsic standards able to serve as (realize) SI units directly.
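The factors listed above are typically combined in an uncertainty budget. Below is a minimal sketch, assuming the common GUM-style approach: convert each contributor to a standard uncertainty, combine independent contributors as a root sum of squares, and multiply by a coverage factor k = 2 for roughly 95% coverage. Every number, and the way each contributor is modeled, is a hypothetical illustration rather than a real instrument specification.

```python
import math
import statistics


def rectangular(half_width: float) -> float:
    """Standard uncertainty of a rectangular (uniform) distribution of +/-half_width."""
    return half_width / math.sqrt(3.0)


def combined_standard_uncertainty(components: dict[str, float]) -> float:
    """Root sum of squares of independent standard uncertainties in the same unit."""
    return math.sqrt(sum(u ** 2 for u in components.values()))


# Hypothetical budget for a DMM reading near 10 V; all values in volts.
readings = [10.0012, 10.0009, 10.0011, 10.0010, 10.0013]  # repeated readings
resolution = 0.0001                                          # one display digit

budget = {
    # Calibrator's stated expanded uncertainty (k = 2) converted to standard uncertainty.
    "reference standard": 0.0004 / 2.0,
    # A one-digit resolution means the true value may lie within +/- half a digit.
    "resolution": rectangular(resolution / 2.0),
    # Standard deviation of the mean of the repeated readings.
    "repeatability": statistics.stdev(readings) / math.sqrt(len(readings)),
    # Drift over the calibration interval and temperature effects, given as +/- limits.
    "stability": rectangular(0.0003),
    "environment": rectangular(0.0002),
}

u_c = combined_standard_uncertainty(budget)
U = 2.0 * u_c  # expanded uncertainty with coverage factor k = 2

for name, u in budget.items():
    print(f"{name:>18}: {u * 1e6:7.1f} uV")
print(f"combined u_c = {u_c * 1e6:.1f} uV, expanded U (k=2) = {U * 1e6:.1f} uV")
```

Listing contributors by name makes it easy to see which one dominates, which is usually the first place to look when a calibration’s uncertainty is too large.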

The references used in these labs can be traced to the SI either as direct realizations of the base units or through the calibration process. You can follow the SI as it expands from its defining physical constants, through the standards, to the calibration instruments compared against those standards throughout industry. Think of the SI at the top of a pyramid, as shown in Figure 2, where everything below the tip helps pass the SI down to all levels of use. Below the SI sit the International Bureau of Weights and Measures (BIPM) and the National Metrology Institutes (NMIs), the organizations that maintain and promote the SI internationally and within each country. Not every lab or person within a country can work directly with an NMI, however. NMI-level calibration standards are used to calibrate primary calibration standards or instruments. Those primary standards are then used to calibrate secondary standards, which in turn are used to calibrate working standards. Each of these levels allows the SI to be disseminated efficiently and affordably down the calibration chain.

The need for traceability

Traceability is the process of documenting the calibration chain’s hierarchy up to the SI. That means documenting each step along the way and ensuring that each instrument’s accuracy is within the acceptable uncertainty limit for the equipment and the work it must do.

As you head down the calibration chain, or the pyramid in Figure 2, every link or block needs to be connected to the one above it so that a calibration performed at any level can be traced up and attached to the SI; this is called traceability. Industries rely on tracking this series of calibrations, performed against increasingly accurate tools, to ensure their measurements are correct. This keeps the standards consistent around the world while allowing them to be disseminated affordably.
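The requirement that every link connect to the one above it can be checked mechanically. Below is a minimal sketch, assuming a hypothetical record format in which each instrument names the instrument that calibrated it and the date its calibration expires. The function walks the links upward and reports a break if a record is missing, expired, or circular. The instrument names mirror the clamp-meter example in this article; the dates are invented.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class CalRecord:
    calibrated_by: Optional[str]  # next instrument up the chain, or the sentinel "SI"
    due: date                     # end of the current calibration interval


def trace_to_si(records: dict[str, CalRecord], start: str, today: date) -> list[str]:
    """Follow calibrated_by links from `start` until reaching the sentinel "SI".

    Raises ValueError if any link is missing, expired, or circular,
    i.e. if the chain of calibrations is broken.
    """
    chain = [start]
    current = start
    while current != "SI":
        record = records.get(current)
        if record is None or record.calibrated_by is None:
            raise ValueError(f"broken chain: no calibration record for {current!r}")
        if record.due < today:
            raise ValueError(f"broken chain: calibration of {current!r} expired on {record.due}")
        current = record.calibrated_by
        if current in chain:
            raise ValueError(f"circular chain at {current!r}")
        chain.append(current)
    return chain


records = {
    "clamp meter": CalRecord("multifunction calibrator", date(2025, 6, 1)),
    "multifunction calibrator": CalRecord("bench calibrator", date(2025, 3, 1)),
    "bench calibrator": CalRecord("NMI reference", date(2025, 9, 1)),
    "NMI reference": CalRecord("SI", date(2026, 1, 1)),
}

print(" -> ".join(trace_to_si(records, "clamp meter", today=date(2025, 1, 15))))
# clamp meter -> multifunction calibrator -> bench calibrator -> NMI reference -> SI
```

Checking each link against today’s date is one simple design choice; a stricter check would confirm that each higher-level calibration was valid on the date the lower-level calibration was performed.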

Calibrations need not be traceable through a particular National Metrology Institute (NMI), such as NIST in the U.S. or NPL in the U.K., or even through your own country’s NMI. The ultimate source of truth for all measurements is the SI, and an unbroken chain of calibrations from industry measuring tools to the seven base units can be followed in a variety of ways.

In the calibration industry, we often hear the misnomer “NIST traceable” calibration. Our true goal is what the VIM defines as metrological traceability to a measurement unit: “metrological traceability where the reference is the definition of a measurement unit through its practical realization.”

Taking this one step further, when each step in the process is documented, every link in the chain is traceable. Following a series of tools and calibrators up the chain to the SI shows the uncertainty level within which each tool’s accuracy sits, so you’ll know you can get an accurate measurement to the digit you need. Paying for more accuracy than that wastes money on excessive calibration. Together, these traceability steps form a chain of calibrations from the SI down to each industry.

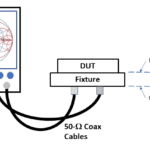

For an example of the calibration hierarchy and its traceability, see Figures 3 and 4. In a facility (Figure 3), a clamp meter is calibrated by a multifunction calibrator. That multifunction calibrator was sent to a calibration lab to be calibrated against a more accurate bench calibrator or measurement standard (Figure 4). The next link in the chain is that this measurement standard was calibrated by more accurate references at an NMI. Finally, the references used at the NMI have been compared directly against the SI standards to achieve the best possible accuracy.

Accurate measurements

We can see tremendous changes in measurement capability over the years. One of my favorite examples is GPS technology. About 30 years ago, early GPS sensors could provide location data to within about 100 m. By 2000, that had improved to about 10 m, and the improvement continued over the next 20 years to modern sensors capable of measuring to within about 0.3 m. As measurement technology advances like this, we must know how to verify these devices effectively, with traceability back to the SI, or the measurements lose their meaning.

Part of the dramatic improvement in GPS accuracy came from beyond the sensor technology itself. Alongside more accurate sensors, a wealth of data is being leveraged to provide other analyses and increase the usability of measurement data. As we work toward a unified digitization of measurement data, we will be able to leverage these same types of analytics to innovate and to be increasingly proactive in our maintenance efforts.

The future of traceability

Traceability allows for broad dissemination of the standards while ensuring a tool will measure what it should. Keeping traceability records for every calibration is an important part of the process, not only for metrology as a science, but for the industries using the tools. Many technical and quality industry standards require proof of the unbroken chain to the pertinent SI units.

Currently, when a tool is calibrated, the work comes with a certificate as well as records of which measurements were tested and what steps were taken to calibrate it. This certificate often includes a statement of traceability, or a list of the calibration standards used to calibrate that specific tool. So far, this process has led each lab to keep its own records. Plus, the actual statement varies from lab to lab, because not all calibration laboratories follow the same industry standards or sit at the same place in the calibration hierarchy. It all involves a lot of paperwork: printing and storing documents in the hope you can find them later if needed.

With nearly everything becoming digital, so is traceability. Calibration labs are working to digitize calibration certificates, which allows the industry, and each facility, to keep a database of traceability for each instrument. The traceability pyramid can become a digital calibration chain, containing information about the measurements performed on a tool as well as which instruments sit above, below, or level with it in the chain.
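A digital calibration chain makes it possible to query the links in both directions. The sketch below uses a hypothetical, deliberately simplified JSON record (not any standardized digital calibration certificate format) in which each certificate lists the certificate IDs of the standards used to produce it. Walking those links downward answers a practical question: if a standard is later found out of tolerance, which calibrations below it might be affected? All IDs and values are invented.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Certificate:
    cert_id: str
    instrument: str
    standards_used: list[str] = field(default_factory=list)  # cert_ids one level up
    results: dict[str, float] = field(default_factory=dict)  # hypothetical: test point -> error


def to_json(certs: list[Certificate]) -> str:
    """Serialize certificates to a JSON array for storage or exchange."""
    return json.dumps([asdict(c) for c in certs], indent=2)


def from_json(text: str) -> list[Certificate]:
    """Rebuild Certificate objects from the JSON produced by to_json."""
    return [Certificate(**item) for item in json.loads(text)]


def affected_by(certs: list[Certificate], standard_cert_id: str) -> set[str]:
    """All cert_ids downstream of a standard's certificate, at any depth."""
    found: set[str] = set()
    frontier = [standard_cert_id]
    while frontier:
        parent = frontier.pop()
        for c in certs:
            if parent in c.standards_used and c.cert_id not in found:
                found.add(c.cert_id)
                frontier.append(c.cert_id)
    return found


certs = [
    Certificate("LAB-001", "bench calibrator", ["NMI-123"], {"10 V DC": 0.000002}),
    Certificate("LAB-002", "multifunction calibrator", ["LAB-001"], {"10 V DC": 0.00002}),
    Certificate("SITE-017", "clamp meter", ["LAB-002"], {"100 A AC": 0.3}),
    Certificate("SITE-018", "digital multimeter", ["LAB-002"], {"10 V DC": 0.001}),
]

# Round-trip through JSON, as a shared database or exchange format might.
restored = from_json(to_json(certs))
print(sorted(affected_by(restored, "LAB-001")))
# ['LAB-002', 'SITE-017', 'SITE-018']
```

Walking upward instead (following `standards_used`) reproduces the traceability statement for any single tool.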

The original desire behind creating the SI was one set of measurements “for all times, for all people.” Something accessible by everyone, for everyone. Digitizing calibration certificates, in partnership with the refreshed SI constants, brings the world one step closer to that idea.
